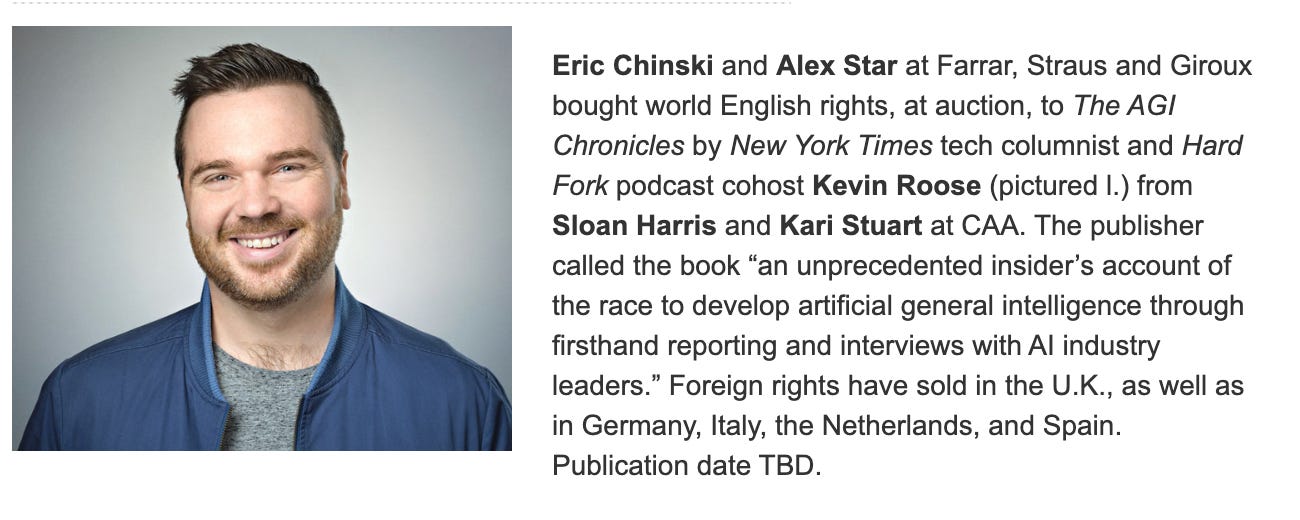

TL;DR: I’m writing The AGI Chronicles, a real‑time, inside story of the race to build artificial general intelligence. Sign up for this newsletter for field notes and early access. And if you have stories to share, please get in touch!

For the last few years (ever since Bing Sydney tried to break up my marriage, basically) I’ve been researching what you could call the “AGI scene”: the small, influential cluster of people in and around San Francisco who are trying to build artificial general intelligence, and who think that smarter-than-human AI is not only possible but imminent. (Some people claim AGI is already here, but most of the people I’m talking to believe that human-level AI is somewhere between six months and a few years away. The more conservative ones think we’ll get it by 2030.)

As I’ve spent time in this scene, I’ve noticed a pattern. Every so often, someone will tell me a crazy story, or relay some notable AI development they’ve witnessed. Maybe they were overseeing a training run when a new capability emerged. Maybe they heard AI executives arguing over some new research direction at a party. Maybe they were in the room when a group of policymakers saw a private demo and grasped the implications of AGI for the first time.

I listen to them, and I think: Is anybody writing this down?

Mostly, the answer appears to be no. The lab employees are too busy to keep detailed notes or diaries. The executives are too worried about lawsuits and leaks. Slack and Signal — where most of the important AI conversations happen — are often set to self-delete. Everyone is talking about AI, but the real insiders are saying less than ever.

As a journalist, this stresses me out. I hate the idea that if building AGI is going to be one of the most consequential technological projects in history — and I believe it may be — we may have little to no record of how it happened.

There are safety-related reasons to support transparency in AI. But there are also more practical reasons to want this stuff preserved for posterity. Think how poor our collective understanding would be without informed accounts of our biggest, most ambitious scientific quests. Imagine if we had no contemporaneous records of the Apollo program, or the Manhattan Project. Now imagine if, a decade or two from now, we’re all living in the post-AGI utopia/dystopia/protopia, and all that remains to explain how we got there are broken Twitter threads, LessWrong posts, and some dusty copies of “Situational Awareness.”

I often tell people that being in San Francisco in 2025 feels like living through the Protestant Reformation. We’ve got the manifestos, the warring sects, the charismatic leaders declaring their One True Path. The backdrop for all of this is, of course, a steady drumbeat of AI progress — the models just want to learn, after all — but the social and anthropological fallout is just as interesting as the loss curves. Whether we’re headed into a new Renaissance or hurtling toward disaster, I hope everyone can agree on the value of writing it down.

So, I’m writing it down.

The working title is “The AGI Chronicles,” less because I love the term AGI — which can mean anything these days from “AI that can do some office jobs” to “the godlike shoggoth that will enslave and/or destroy humanity” — than because AGI has become an organizing idea for the AI industry, a rallying cry, an ideological Schelling point. AGI is what some of the world’s smartest, most ambitious people spend their days thinking about. And it’s the thing that, if it’s actually built, could shape the rest of human history.

Here’s the official blurb:

My main competitor here is time. It’s hard to write something relevant about AI on a traditional publishing schedule, and the pace of progress often means that your book is obsolete by the time it’s published. (Trust me on this: I published a book about AI in 2021, a year before ChatGPT came out.)

But I’m working fast, and I’ve got an incredible researcher/collaborator — Jasmine Sun, a writer and formerly a PM at Substack — helping me. We’re busy gathering AGI stories and weaving them into an engaging narrative. (And don’t worry, Hard Fork listeners and NYT readers — I’m still doing my other jobs, too.)

Here’s how you can help: If you are part of the AGI scene (and here I include current and former employees of frontier AI labs, informed outsiders who are evaluating AI systems and studying AI risks, and policymakers writing rules for the AI industry) and you want to share your stories for the book, get in touch. (Signal: @kevin.roose1)

If you have artifacts you’d be willing to share — photos, screenshots, emails, forbidden PDFs, custom paperclips — I’d love to see those, too. (We can discuss terms; I’m open to talking on deep background and have made other privacy-protective arrangements with people who want to stay anonymous.)

And if you’re interested in following along, you can subscribe to this Substack for occasional updates, tidbits from the reporting process, and early access to material from the book.

This will be fun — join me!